Main Page: Difference between revisions

| Line 156: | Line 156: | ||

The variation of block processing times was not considered in the above calculations. To see the power of pipeline parallelism, the automobile manufacturing processes illustrates a similar practical situation. The Hyundai Motor Company's production pipelines can produce 3,835 vehicles per day and 1.4M per year. | The variation of block processing times was not considered in the above calculations. To see the power of pipeline parallelism, the automobile manufacturing processes illustrates a similar practical situation. The Hyundai Motor Company's production pipelines can produce 3,835 vehicles per day and 1.4M per year<ref>https://en.wikipedia.org/wiki/List_of_Hyundai_Motor_Company_manufacturing_facilities</ref>. | ||

= References = | = References = | ||

Revision as of 13:41, 18 June 2023

Welcome to Twiki -- A Toichain Wiki

This is the unofficial Toichain wiki site. Content on this site is subject to frequent changes.

Introduction

The blockchain protocol was introduced via a paper entitled "Bitcoin: A peer-to-peer Electronic Cash System" [1] by Satoshi Nakamoto in 2008. Although there were other peer-to-peer file-sharing projects before this time, such as Napster [2] and Bittorrent [3], and other electronic currency attempts, the Bitcoin project demonstrated the strongest staying power compared even to the currently running 23,000+ other blockchains. Unlike the new cryptocurrencies, the Bitcoin is often referred to as "digital gold" for its scarcity, there are only 21 million hard-coded Bitcoins in the protocol. Other protocols (and associated cryptos) have different token supply amount and distribution policies.

The most attractive common blockchain features are the decentralized consensus-driven decision making process and step-by-step cryptographic proving system. In addition to cryptocurrencies, there are many applications that can benefit from such a secure store of value with reliable transaction ledger without any third-party involvements.

Before trying to understand the Blockchain protocol and its variants, it is important to understand the differences between an algorithm and a protocol.

In general, an algorithm is a program that runs on a single computer solving a single problem. A protocol, however, is a program that runs on all computers in parallel solving a single problem. An algorithm must be transformed to run on all computers in parallel solving the same problem before it to be called a protocol. Therefore, protocol development is significantly more difficult than algorithms.

Consensus Protocols

A good consensus protocol in a blockchain system has at least four properties:

- Fault tolerance to defend failures caused by natural disasters and human hacks or accidents

- Byzantine failure tolerance to defend exploitation by colluding parties

- Centralization avoidance to prevent corruptions in the decision making process

- Source code tamper resistance to automatically reject malicious attacks

Without assuming all nodes active at all times, the blockchain rely on "gossip" protocol to communicate with other nodes. There are two popular types of consensus protocols: Proof of Work (POW) [4] or Proof of Stake (POS) [5]. Others include Proof of Possession(POP)[6], Proof of History(POH)[7]. The ultimate goal is to eliminate double-spending, even if some nodes maybe tampered to attack the integrity of the network, since the protocols are Open Source.

The Bitcoin network uses POW that forces participating miners to compete for the fastest hashing rate by solving a compute intensive cryptographic puzzle. Although it withstood more than 14 year of battle ground tests, it has suffered criticism on escalating electricity consumption [8]. The POH network Solana suffered multiple single-point-failure incidents [9]. All protocols are susceptible to 51% attacks [10].

Since 2009, Bitcoin has been proven the strongest against the common hacks [11]. As the POW puzzle difficulty has increased dramatically over the years, the probability of 51% attack on the Bitcoin network becomes more difficult. POS protocols can save more than 90% energy consumption compared to POW chains. According to Forbes, there are 80 cryptocurrencies use POS protocols at the time of this writing.

There are approximately 1,000 blockchains in four types: public, private, consortium, and permissioned [12].

Cryptographic Proofs

The Blockchain protocol relies on UTXO (unspent transaction output) model and lock-step cryptographic proofs to authenticate every transaction. Once spent, the UTXO is destroyed. Double-spending is theoretically impossible. Each proof step must be executed on a script engine running the same protocol on all nodes. The concurrently produced results are confirmed by the consensus protocol mentioned above and committed to the chain.

Blockchain Trilemma

Blockchain protocols have three common aspects: security, decentralization and scalability -- the three terms that do not have precise definitions but intuitively understood [13]. To date, the existing cryptographic tools have been proven effective for transaction security before the introduction of smart contracts. Decentralization is given by the nature of the protocols. In theory, there should be no service downtimes unless the entire network is down and all nodes crash at the same time. The scalability challenge eludes to deliverable performance and transaction finality which remained unclear.

The trilemma was first introduced by Vitalik Buterin[14], one of the co-founders of Ethereum network. The problems are real. There seems no obvious solutions to accomplish all three goals using known methods. Therefore, all proposed solutions revolve around reducing "opportunity costs" focusing only on partial goals.

Treating the blockchain network as an infrastructure for transaction processing, one would find the trilemma was only an extension of the legacy infrastructure scaling performance and reliability dilemma, where "infrastructure reliability" also does not have a commonly accepted definition. Fortunately all these metrics, although important, are non-functional. Every proposed solution can be a feasible solution with varying "opportunity costs". There was also no obvious solutions to overcome the dilemma.

Statistic Multiplexed Computing (SMC)

The principle of using unreliable components to build arbitrarily reliable system was first lectured by von Neumann in early fifties[15]. The idea was to use probabilistic logic to quantify the arbitrary reliability of a system by multiplexing all components. This theory was applied to the construction of Internet protocols to deliver the "best effort" reliability. Incidentally, the best effort reliability also enabled "best effort" performance that in turn enabled the unlimited scalability of the Internet. For the emerging web3.0 technologies, it is important to apply the same methodology to both centralized and decentralized computing for seamless technology migration and integration.

Definitions

Infrastructure scalability does not have a commonly accepted definition. It helps to define the challenging metrics more precisely before the infrastructure protocol design. In the following, for definitions #1 and #2, the word "infrastructure" implies all applications running on the infrastructure.

- Infrastructure Reliability: MTBF (mean time between failure) =

- Infrastructure Performance:

- Infrastructure Scalability: Expanding the infrastructure in number of processors, networks or storage should have incrementally better impacts on reliability and performance.

- Record Immutability: For transaction ledgers, all records and changes are final. This is required for blockchain ledger infrastructures.

Protocol Design and Proofs

Before building a robust protocol for infrastructure computing, there are well-known impossibilities and possibilities in physics and computer science that must be thoroughly examined for extreme cases.

The first challenge is infrastructure reliability. A computing infrastructure must deploy multiple components including computing, networking and storage. An infrastructure protocol defines how applications can compute, communicate and store results. A formal proof was found that it is impossible for reliable communication in the face of arbitrary crashes [16]. The laws of physics dictate component reliability [17] that 100% component reliability is not possible. These facts demands the infrastructure protocol (program-program communication protocol) to provide complete component (processing, networking and storage) decoupling from application program logics. This decoupling requirement forces the protocol to exploit "decentralized" or "stateless" components that the legacy infrastructure protocols, such as message passing interface (MPI), remote procedure call (RPC) and remote method invocation (RMI), cannot handle. The blockchain protocol is such an example of high availability protocol.

Quantifying infrastructure reliability can be traced back to the computer pioneer von Neumann. In addition to his contribution to computer architecture, von Neumann also penned a study on building reliable system using unreliable components using probabilistic logics in early fifties [18]. The Internet was built using statistic multiplexed communication protocols. Probabilistic logic is the only possible definition for infrastructure reliability. In literature, this is referred to as the "best effort" reliability. Ironically, this best effort reliability also enabled the Internet's infinite scalability -- the benefit we enjoy now and into the future.

The Bitcoin protocol exploits multiple anonymous decoupled (or decentralized) computers connected through the Internet protocol. Judging from the Bitcoin network's reliability to date [19], von Neumann's theory seems working well.

The second challenge is infrastructure performance. Since the infrastructure must deploy multiple computing, networking and storage components, unless all components can be fully exploited in any scale, incremental performance delivery is impossible. Thus, von Neumann's statistic multiplexing theory must also apply here. The result is a new computing paradigm: Statistic Multiplexed Computing (SMC). The existing blockchain protocols fall short.

Infrastructure scalability challenge is automatically resolved once the performance challenge is resolved without negatively impacting reliability -- "best effort" performance" meets "best effort reliability".

However, monotonically increasing performance may reach some theoretical limits. Thus, the only remaining challenge is Finality -- can any blockchain protocol design practically deliver true ledger finality in perpetuity?

Theoretically, only resources fully statistic multiplexed blockchain protocol can meet the scalability challenge. The fixed chain "sharding" or "Danksharding" [20] will only increase protocol development complexity with marginal performance gains.

Scalability Proof

The proof makes two assumptions:

- The computation problem size can expand indefinitely, since every useful infrastructure is expected to solve bigger problems.

- There are infinite supplies of computing, networking and storage components in time.

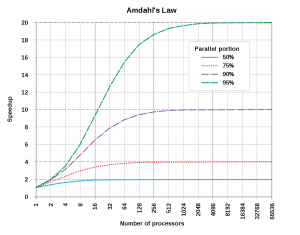

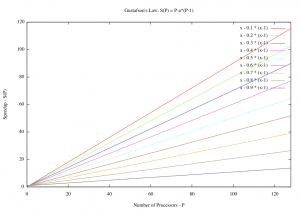

A formal proof requires a parallel computing model, when taking the number of components to infinity, can yield the maximal performance limit under the assumptions. There are two such models: Amdahl's Law [21] and Gustafson's Law [22]. The problem is that these two "laws" seem to yield complete opposite performance predictions.

The Amdahl's Law seems to predict parallel performances following the economic law of diminishing of returns while Gustafson's Law seems to lead infinite speedups. Gustafson's Law was used to argue for Massively Parallel Computing (MPC) experiments and Amdahl's Law was used to argue for bigger supercomputer constructions. In the last three decades, these laws have been used to build parallel computing systems and multi-processor operating systems and computing clouds.

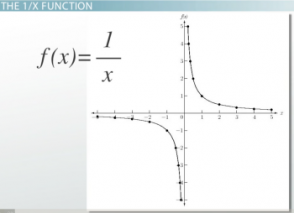

A careful examination revealed that the two laws are mathematically dependent [23] where Amdahl's formulation has a tight peak performance bound when taking the number of parallel processors to infinity, where x = the percentage of serial portion of instructions in a program. This means that the peak performance of every parallel program is above-bounded to , when , the performance will asymptotically approach to , when the speedup will approach 1. Thus, if the computing problem's size is fixed, x will eventually approach to 1 as the number of processors increases -- economic law of diminishing of return. If the problem size is open (solving bigger problems), then increasing the number of processors will force x to approach 0. The challenge is that quantifying for any practical parallel program is very hard. Gustafson's Law was derived from running actual parallel programs. It can be easily used to quantify in Amdahl's Law for any practical parallel program.

This model can be validated in practice when developers simply increasing the problem size to use more parallel processors.

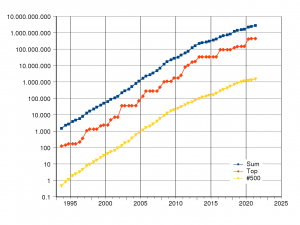

A classical example is the TOP500 supercomputer benchmarks (linear solvers) that have open problem sizes for all manufacturers to compete in the world's fastest supercomputer race [24] .

In practical blockchain applications, the number of transactions to be processed will increase until it satisfies all possible requests. The ledger storage will continue to grow. In other words, the available computing, networking and storage components can cover the needs under proper incentives. The infrastructure no longer has the structural deficiency.

Conclusion

These discussions can conclude that in theory, a statistic multiplexed blockchain protocol can deliver infinitely scalable infrastructure in performance and reliability. Leveraging blockchain's data and processing authenticity guarantee via the lock-step cryptographic proofs, infrastructure security is also feasible.

This surprising result is also applicable to ledger storage. Without multiplexing, the blockchain ledger storage will eventually saturate all nodes causing the chain to collapse. The SMC blockchain protocol promises to hold the true finality of all transactions in perpetuity.

These discussions follow the inductive logic of the Law of Large Numbers [25]. It is not possible to make the same arguments for other existing blockchain protocols.

There are more details for the protocol implementation, especially for Open Source public chains. The protocol implementation must be code tamper resistant, natural disaster and human accident resistant. A secure and performant decentralized consensus protocol is needed.

SMC Proof of Stake Protocol

Existing POS protocol designs revolved around staking policies and randomness of the selection algorithm. Considering infrastructure scalability will add much complexity to the design. The examples include chain sharding and Danksharding [26].

The challenge is complex -- we must deliver very high performance without compromising security and reliability of the network.

Technically, transaction replication and parallel processing were considered dangerous [27]. The blockchain protocol resolved the double-spending problem. It did not solve the parallel processing problem.

According to Professor Michael Flynn, there are three high-level parallel processing topologies: SIMD, MIMD, and pipeline [28]. However, for eliminating double-spending, all transactions must be serialized, since each block may contain transactions affecting transactions in multiple other blocks. "Race conditions" and non-deterministic results are not allowed. The only possible topology entry is pipeline. This means we must have a way to form a validation pipeline for transaction blocks.

The problem is that the nature of the blockchain protocol is that all nodes run independently in parallel with little communication with other nodes via "gossip" protocols. It is far easier to force all nodes store the entire ledger than forcing them to form any pipeline and store partial chain data.

ACAN (Active Content Addressable Network) [U.S. and Other Patents Pending]

ACAN is a high-level data communication protocol that completely decouples all hardware components from communicating programs. The need for complete component decoupling was implemented in the original blockchain protocol via "gossip" protocol. This delivered blockchain's high availability and security. The "gossip" implementation is insufficient for complex parallel processing signaling.

ACAN supports three simple APIs:

- read(&key, &value): key holds a search pattern in a "tuple space" where all nodes are connected. value holds the matched tuple, if any. This is a blocking call that a requesting program will be blocked until a pattern-matched tuple is found. The matching tuple remains in the "tuple space".

- get(&key, &value): Same as the "read()" API, except that the matching tuple is removed from the "tuple space".

- put(key, value): Inserts <key, value> into the "tuple space".

It is relatively easy to convert "gossip" protocol to ACAN in existing blockchain protocols. With ACAN, all three parallel processing topologies, pipeline, SIMD and MIMD will form dynamically at runtime without violating the serialization needs of transaction block validation.

The highly parallel nature of the blockchain protocol will automatically implement the SMC principle in real time.

The critical feature of this dynamic SMC pipelined architecture is the infinite scaling feature.

The speed up of a pipeline can be represented as: , where p = number of processors in the pipeline, b = total blocks to be processed. Take the number of blocks to infinity:

This means that as long as there are enough blocks to be processed, the entire chain's performance will be abound-bounded to the number of nodes in the pipeline.

The variation of block processing times was not considered in the above calculations. To see the power of pipeline parallelism, the automobile manufacturing processes illustrates a similar practical situation. The Hyundai Motor Company's production pipelines can produce 3,835 vehicles per day and 1.4M per year[29].

References

- ↑ https://bitcoin.org/bitcoin.pdf

- ↑ https://www.napster.com/us

- ↑ https://www.bittorrent.com/

- ↑ https://en.wikipedia.org/wiki/Proof_of_work

- ↑ https://www.investopedia.com/terms/p/proof-stake-pos.asp

- ↑ https://identityserver4.readthedocs.io/en/latest/topics/pop.html

- ↑ https://www.infoworld.com/article/3666736/solana-blockchain-and-the-proof-of-history.html

- ↑ https://www.forbes.com/advisor/investing/cryptocurrency/bitcoins-energy-usage-explained/

- ↑ https://messari.io/report/solana-analyzing-downtimes-statistics-and-ecosystem-development

- ↑ https://originstamp.com/blog/has-there-ever-been-a-51-attack-on-bitcoin/#notable-51-attacks

- ↑ https://www.theguardian.com/technology/2014/mar/18/history-of-bitcoin-hacks-alternative-currency

- ↑ https://increditools.com/blockchains/

- ↑ https://sensoriumxr.com/articles/what-is-the-blockchain-trilemma

- ↑ https://en.wikipedia.org/wiki/Vitalik_Buterin

- ↑ https://static.ias.edu/pitp/archive/2012files/Probabilistic_Logics.pdf

- ↑ https://groups.csail.mit.edu/tds/papers/Lynch/jacm93.pdf

- ↑ Encyclopedia of Physical Science and Technology, Editor-in-Chief Robert A. Meyers, ISBN 978-0-12-227410-7, 2001

- ↑ https://static.ias.edu/pitp/archive/2012files/Probabilistic_Logics.pdf

- ↑ https://buybitcoinworldwide.com/bitcoin-downtime/

- ↑ https://ethereum.org/en/roadmap/danksharding/

- ↑ https://en.wikipedia.org/wiki/Amdahl%27s_law

- ↑ https://en.wikipedia.org/wiki/Gustafson%27s_law

- ↑ https://cis.temple.edu/~shi/wwwroot/shi/public_html/docs/amdahl/amdahl.html

- ↑ https://www.top500.org/

- ↑ https://www.sciencedirect.com/topics/mathematics/laws-of-large-number

- ↑ https://ethereum.org/en/roadmap/danksharding/

- ↑ https://pages.cs.wisc.edu/~yxy/cs764-f20/papers/replication.pdf

- ↑ https://link.springer.com/referenceworkentry/10.1007/978-0-387-09766-4_2

- ↑ https://en.wikipedia.org/wiki/List_of_Hyundai_Motor_Company_manufacturing_facilities

Consult the User's Guide for information on using the wiki software.