SMC

Introduction

SMC stands for Statistic Multiplexed Computing and Communication. The idea was inspired by von Neumann's 1952 paper on probabilistic logics and the synthesis of reliable organisms from unreliable components [1]. Building fault-tolerant, mission-critical systems was the triggering use case.

Modern computer applications must rely on networking services. Every networked application forms its own infrastructure consisting of network, processing, storage and software components. A data center or cloud service can multiplex multiple applications on the same hardware, but the definition of infrastructure remains with each application.

The challenge in building a fault-tolerant, mission-critical application is deciding how its programs should communicate with other programs and devices.

Since every hardware component has a finite service life, mission-critical software programs must, in theory, be completely decoupled from hardware components such as networking, processing, storage, sensors and other devices. This decoupling allows hardware to be managed freely without changing programs.

This simple requirement seems very difficult to satisfy in practice.

Canary In The Coal Mine

The TCP/IP protocol was an experimental protocol for reliably connecting any two computers in a world-wide network, much like the "canary in a coal mine". The OSI model [2] is a conceptual model from the ISO (International Organization for Standardization) that provides "a common basis for the coordination of standards development for the purpose of systems interconnection" [3]. Today, its 7-layer model is widely taught in universities and technology schools.

For every connection-oriented socket circuit, all commercial implementations guarantee "order-preserved lossless data communication": packet losses, duplicates and errors are automatically corrected in the lower-layer protocols. It seems perfectly reasonable to deploy these "hop-to-hop" connection-oriented TCP sockets for mission-critical applications.

Unfortunately, the fine print in the OSI model specification has not received much attention. The "order-preserved lossless data communication" is guaranteed only if both the sender and the receiver are 100% reliable. If the sender or the receiver can crash arbitrarily, then reliable communication is impossible [4]. In practice, we call TCP/IP a "best effort" reliable protocol because all nodes do try their best to forward packets and to repair errors and duplicates. Building mission-critical applications directly on these protocols is inappropriate.

The problem is that it is impossible to eliminate single-point failures. The "canary" worked well only for communication applications where data losses and service downtime can be tolerated. For mission-critical applications, such as banking, trading and air/sea/land navigation, service crashes can cause irreparable damage and loss of human life.

Even for banking applications, arbitrary transaction losses cannot be eliminated completely. Banks resort to legal measures to compensate verified customer losses. There are unclaimed funds to be handled by the banks.

In business, the IT professionals simply try to minimize "opportunity costs". Solving the infrastructure scaling problem seems beyond our reach.

Fruits of Poisonous Tree

The "hop-to-hop" protocol is the DNA of existing enterprise system programming paradigms. These include RPC (remote procedure call), MPI (message passing interface), RMI (remote method invocation), distributed shared memory and many others. These are the only means to communicate with and retrieve data from a remote computer.

Applications built using these protocols form infrastructures that have many single-point failures. Every such single-point failure can bring down the entire enterprise.

The "Achilles Heel" of the legacy enterprise programming paradigms is the lack of a "re-transmission discipline". The hop-to-hop protocols provide false hope of data communication reliability. Programmers are at a loss when dealing with timeout events: should we retransmit or not? If we do not, data would be lost. If we do, how should duplicates be handled? What is the probability of success after the last timeout?

Eliminating infrastructure single-point failures requires completely decoupling programs and data from hardware components -- a programming paradigm shift away from the hop-to-hop communication paradigms.

Active Content Addressable Networking (ACAN)

Content addressable networks are peer-to-peer (P2P) networks. Many content addressable networks (CAN) have been proposed [5]. These include the distributed hash table (DHT) [6] and projects such as Chord [7], Pastry [8] and Tapestry [9]. These networks focus on information retrieval efficiency.

We need information retrieval efficiency and the freedom of multiplexing hardware components in real time. We call this ACAN (Active Content Addressable Networking).

The idea of ACAN is to enable automated parallel processing based on an application's data retrieval patterns without explicitly building parallel SIMD, MIMD and pipeline clusters. This was inspired by the failures of early dataflow machine research [10]. That research failed to recognize the importance of parallel processing granularity [11]. The meticulously designed multiprocessor architectures failed to compete against simpler single processors.

The parallel processing granularity optimization problem is to balance the disparity between processing and communication capabilities in an infrastructure. Conventional wisdom considers communication overheads the main challenge in parallel computing. In reality, synchronization overheads are the main problem: unless computing speeds are perfectly matched with communication latencies, optimal parallel performance is not possible. This situation may be visualized as finding the fastest route across a muddy area followed by a hard-surface area, where the straight line is obviously not optimal.

Depending upon the resistances, the optimal crossing point can be anywhere along the interface between the two media.
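The muddy-area picture can be made concrete with a small numerical sketch. The geometry and names below are assumptions made only for illustration: the start lies a distance `a` above a straight interface, the goal lies a distance `b` below it and `d` further along, and the two areas allow constant speeds `v_mud` and `v_hard`. Because the total travel time is a convex function of the crossing point, a simple ternary search finds the minimum.

```python
import math


def travel_time(x, a, b, d, v_mud, v_hard):
    """Time to walk from (0, a) to the crossing point (x, 0), then on to (d, -b)."""
    return math.hypot(x, a) / v_mud + math.hypot(d - x, b) / v_hard


def best_crossing(a, b, d, v_mud, v_hard, iters=200):
    """Ternary search for the crossing point that minimizes total travel time."""
    lo, hi = 0.0, d
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if travel_time(m1, a, b, d, v_mud, v_hard) < travel_time(m2, a, b, d, v_mud, v_hard):
            hi = m2   # the minimum cannot lie to the right of m2
        else:
            lo = m1   # the minimum cannot lie to the left of m1
    return (lo + hi) / 2


if __name__ == "__main__":
    a, b, d = 1.0, 1.0, 2.0
    x_opt = best_crossing(a, b, d, v_mud=1.0, v_hard=3.0)
    x_straight = d * a / (a + b)   # where the straight line crosses the interface
    print("optimal crossing:", x_opt)
    print("time, optimal   :", travel_time(x_opt, a, b, d, 1.0, 3.0))
    print("time, straight  :", travel_time(x_straight, a, b, d, 1.0, 3.0))
```

With slow mud and a fast hard surface, the best crossing point moves toward the start so that less distance is covered in mud. When both speeds are equal, the search returns the straight-line crossing.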

The classic Brachistochrone problem [12] models this problem in physics, a puzzle solved by Johann Bernoulli [13]. The solution optimizes between two counteracting forces, gravity and the normal force, as the pull of gravity along the path changes continuously.

For parallel computing, depending on the loads and system scheduling policies, the deliverable computing and communication speeds change dynamically. Precise measures are not possible. Finding the optimal granularity requires the program-to-program communication protocol to allow granularity changes without re-compiling the source programs. Furthermore, the load fluctuations in parallel processing also include potential component failures. The peer-to-peer ACAN protocol was designed to fit both needs: load balancing and fault tolerance.

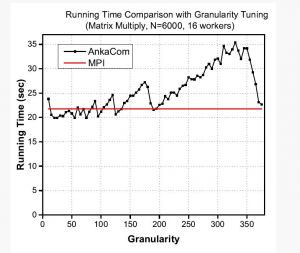

On the surface, parallel computing over a P2P network will be slower than over direct hop-to-hop networks such as MPI -- the protocol commonly used for TOP500 supercomputer benchmarks. The problem is that parallel programs built on hop-to-hop protocols such as MPI require an explicit partitioning factor to be programmed before compilation. Changing granularity requires re-programming. Tuning for the optimal granularity would be exceedingly laborious.

ACAN used the Tuple Space abstraction for automated data-parallel computing [14].
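To illustrate the abstraction only, here is a minimal, single-process sketch of a tuple space in the Linda tradition. It is not the ACAN implementation: the class `TupleSpace`, the tuple names `"work"` and `"result"`, and the sum-of-squares workload are all hypothetical. Workers are written without any knowledge of how the problem is partitioned, so the granularity becomes a runtime argument rather than something fixed at compile time. Python threads are used for brevity and do not give a real speedup here.

```python
import queue
import threading


class TupleSpace:
    """Toy tuple space: put() adds a named tuple, take() removes one (blocking)."""

    def __init__(self):
        self._bags = {}
        self._lock = threading.Lock()

    def _bag(self, name):
        with self._lock:
            return self._bags.setdefault(name, queue.Queue())

    def put(self, name, value):
        self._bag(name).put(value)

    def take(self, name, timeout=None):
        return self._bag(name).get(timeout=timeout)


def worker(space):
    """Stateless worker: it only knows tuple names, not peers or partition sizes."""
    while True:
        lo, hi = space.take("work")
        if lo is None:                       # termination tuple
            return
        space.put("result", sum(i * i for i in range(lo, hi)))


def parallel_sum_squares(n, granularity, workers=4):
    space = TupleSpace()
    threads = [threading.Thread(target=worker, args=(space,)) for _ in range(workers)]
    for t in threads:
        t.start()
    chunks = 0
    for lo in range(0, n, granularity):      # granularity is chosen at run time
        space.put("work", (lo, min(lo + granularity, n)))
        chunks += 1
    total = sum(space.take("result") for _ in range(chunks))
    for _ in threads:
        space.put("work", (None, None))      # one termination tuple per worker
    for t in threads:
        t.join()
    return total


if __name__ == "__main__":
    print(parallel_sum_squares(1000, granularity=10))
    print(parallel_sum_squares(1000, granularity=250))
```

Both calls run the same worker code and return the same sum. Only the number and size of the work tuples differ.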

Computing experiments demonstrated that optimal granularity tuning using ACAN can consistently outperform fixed-partition MPI programs at multiple optimal points [15].

The optimal granularities (the lowest points on the black line in the above figure) share a property with the points on the Brachistochrone curve: all points have the same shortest traversal time regardless of the starting position on the curve (see https://www.youtube.com/embed/skvnj67YGmw?start=1354).
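The equal-time property referred to here is the tautochrone property of the cycloid, the curve that also solves the Brachistochrone problem. As a reminder, it can be written as follows under the usual idealized assumptions: uniform gravity g, no friction, y measured downward, and a generating circle of radius r. These symbols are introduced here for illustration only.

```latex
\begin{aligned}
x(\theta) &= r(\theta - \sin\theta), \qquad
y(\theta) = r(1 - \cos\theta), \qquad 0 \le \theta \le \pi, \\
T(\theta_0) &= \sqrt{\frac{r}{g}} \int_{\theta_0}^{\pi}
  \sqrt{\frac{1 - \cos\theta}{\cos\theta_0 - \cos\theta}} \, d\theta
  = \pi \sqrt{\frac{r}{g}}
  \quad \text{for every starting point } 0 \le \theta_0 < \pi .
\end{aligned}
```

The descent time to the lowest point does not depend on the starting parameter, which is the sense in which every starting position has the same traversal time.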

In the parallel computing experiments, the communication load is changed by tuning the granularity at regular intervals: a smaller granularity means more frequent communication sessions and thus more communication overhead, while a larger granularity means fewer communication sessions but larger synchronization overheads.
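The trade-off can be seen in a deliberately simple cost model. This model is an assumption made for illustration and is not the model used in the cited experiments: work is split into tasks of `g` units, each task pays a fixed communication overhead, and workers proceed in synchronized rounds so that a partially filled last round leaves workers idle.

```python
import math


def makespan(n_units, workers, g, unit_time, task_overhead):
    """Total time under a toy model with synchronized rounds of equal-size tasks."""
    tasks = math.ceil(n_units / g)           # smaller g -> more tasks, more overhead
    rounds = math.ceil(tasks / workers)      # larger g -> coarser rounds, idle workers
    return rounds * (g * unit_time + task_overhead)


def best_granularity(n_units, workers, unit_time, task_overhead):
    """Exhaustively pick the granularity with the smallest modeled makespan."""
    return min(
        range(1, n_units + 1),
        key=lambda g: makespan(n_units, workers, g, unit_time, task_overhead),
    )


if __name__ == "__main__":
    n, p, w, c = 1000, 4, 1.0, 5.0
    for g in (1, 50, 125, 250, 334, 1000):
        print(g, makespan(n, p, g, w, c))
    print("best g:", best_granularity(n, p, w, c))
```

Even in this crude model the cost curve is not smooth. The ceiling terms create several local minima, so a fixed partitioning chosen before compilation can easily land on the wrong one when speeds or worker counts change.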

Similar experiments were conducted on ACAN storage performance against the Hadoop distributed file system [16].

Programs in ACAN are "stateless" programs that can run on any computer in ACAN. Therefore the ACAN infrastructure will have no single-point failures regardless of scale. The ACAN infrastructure can be repaired or resized in real time without interrupting service. Real-time granularity changes are also possible without interrupting service.

A more remarkable feature of ACAN applications is the ability to deliver unbounded performance as the infrastructure expands in size with open problem sizes. It is theoretically capable of harnessing multiple quantum computers.

Blockchain Protocols

Blockchain protocols also form P2P networks. Although there are different consensus protocols, such as POW (proof of work), POS (proof of stake), POH (proof of history) and POP (proof of possession), the general architecture remains the same: the protocol runs on all computers. Each computer validates transactions subject to the consensus protocol for finality. Once committed to the cryptographically linked chain, the transactions are immutable. The consensus protocol ensures that double-spending does not happen.
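The phrase "cryptographically linked chain" can be illustrated with a generic hash chain. The sketch below is not any particular blockchain's block format: the field names and the JSON encoding are assumptions chosen for brevity. Each block stores the hash of its predecessor, so altering an old transaction invalidates every later link.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64   # placeholder predecessor hash for the first block


def block_hash(prev_hash, transactions):
    body = json.dumps({"prev": prev_hash, "txs": transactions}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_block(chain, transactions):
    prev = chain[-1]["hash"] if chain else GENESIS_PREV
    txs = list(transactions)
    chain.append({"prev": prev, "txs": txs, "hash": block_hash(prev, txs)})


def verify(chain):
    """Recompute every hash and check that each block points at its predecessor."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        if block["hash"] != block_hash(block["prev"], block["txs"]):
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain = []
    append_block(chain, ["alice->bob:5"])
    append_block(chain, ["bob->carol:2"])
    print(verify(chain))                  # chain is intact
    chain[0]["txs"][0] = "alice->bob:500"
    print(verify(chain))                  # tampering is detected
```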

The POW protocol is not environmentally friendly: it consumes too much electricity solving the mining puzzles. Other consensus protocols are more energy efficient, but all suffer the same scaling challenges: expanding the network and growing the ledger gradually bring down network performance. The storage requirements increase monotonically. The network will eventually crash when the storage of all nodes is saturated. Dividing the chain into multiple "shards" can only postpone the eventual saturation at the expense of increased protocol complexity.

The blockchain protocols avoided the hop-to-hop protocol trap by using various "gossip" protocols. In theory, there should be no single-point failure in any blockchain network or application. Therefore, barring protocol bugs, there should never be any service downtime. The Bitcoin network has demonstrated an excellent uptime record [17] that no legacy infrastructure could match.

Another remarkable feature of the Bitcoin network is that its protocol programs are Open Source. Anyone can download the code, modify it and turn it against the network. There have been no successful attacks on the Bitcoin network since its 2009 launch.

It is also interesting to observe that the blockchain protocols are silent on transaction re-transmission discipline. The blockchain wallets are responsible for checking the status of their own transactions and retransmitting if necessary. Since double-spending is not possible, retransmitting a transaction is a trivial matter, and the retransmission would most likely find a different route to transaction approval.
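Why retransmission becomes trivial can be sketched with an idempotent ledger. Everything below is hypothetical and greatly simplified: `ToyLedger`, the three route functions and the content-derived transaction ID stand in for a real network and are not part of any wallet API. The point is that a timeout tells the sender nothing about the outcome, so the wallet checks the ledger and retries freely, because a duplicate of an already committed transaction is simply ignored.

```python
import hashlib


class ToyLedger:
    """Hypothetical ledger that commits each transaction ID at most once."""

    def __init__(self):
        self.committed = {}

    def submit(self, tx_id, payload):
        if tx_id in self.committed:
            return False                  # duplicate: ignored, nothing is spent twice
        self.committed[tx_id] = payload
        return True

    def is_committed(self, tx_id):
        return tx_id in self.committed


def tx_id_for(payload: bytes) -> str:
    # Derived from the content, so every retransmission carries the same ID.
    return hashlib.sha256(payload).hexdigest()


def lost_request(ledger, tx_id, payload):
    raise TimeoutError("request never arrived")


def lost_ack(ledger, tx_id, payload):
    ledger.submit(tx_id, payload)                 # the transaction lands...
    raise TimeoutError("acknowledgement lost")    # ...but the wallet never hears back


def healthy(ledger, tx_id, payload):
    return ledger.submit(tx_id, payload)


def send_with_retransmit(ledger, payload, routes):
    """Try each route in turn; after every attempt, check the ledger itself."""
    tx_id = tx_id_for(payload)
    for attempt, route in enumerate(routes, start=1):
        try:
            route(ledger, tx_id, payload)
        except TimeoutError:
            pass                          # a timeout says nothing about the outcome
        if ledger.is_committed(tx_id):
            return tx_id, attempt
    raise RuntimeError("transaction not committed on any route")


if __name__ == "__main__":
    ledger = ToyLedger()
    print(send_with_retransmit(ledger, b"pay 5 to bob", [lost_request, lost_ack, healthy]))
```

In this run the first route loses the request and the second loses only the acknowledgement. The status check after the second attempt finds the transaction committed, and any further retransmission would be rejected as a duplicate.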

However, scalability remains the common challenge for all blockchain protocols. For POW consensus chains, the increasing energy consumption has caused serious sustainability concerns. POS chains can reduce energy consumption by more than 99%, but because cryptographically proven ledgers are immutable and keep growing, their scalability is still challenged: the chains will have to shut down when the storage of all nodes is saturated.

It is evident that all blockchains should be infinitely scalable to be considered "digital gold". This means that expanding the network (adding nodes) should increase the network's performance, reliability and security. This is the motivation for the SMC Blockchain Protocol.

SMC Blockchain Protocol

SMC blockchain protocol aims to solve the scalability challenge by enabling automated parallel processing while enhancing network security and reliability at the same time. The ACAN concept is a perfect fit for this case. ACAN enables automated SMC by forcing the transaction validation processes to form SIMD, MIMD and pipeline clusters in real time.

Unlike other chains, the SMC Blockchain Protocol maintains only R (R > 1) copies of the ledger on randomly selected nodes. This enables the overall network storage capacity to grow indefinitely as more nodes are added. The value of R constrains the transaction replication overhead to a constant, so adding nodes accelerates transaction processing throughput. The actual R value can be determined experimentally without stopping service.
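The storage argument can be checked with a small simulation. It is a sketch under stated assumptions rather than the protocol's actual placement rule: blocks are taken to be of equal size, and each block's R holders are drawn uniformly at random with Python's `random.sample`. The function name `place_blocks` is hypothetical.

```python
import random


def place_blocks(n_blocks, n_nodes, r, seed=0):
    """Store each block on r distinct, randomly chosen nodes; return per-node load."""
    if not 1 < r <= n_nodes:
        raise ValueError("need 1 < r <= n_nodes")
    rng = random.Random(seed)
    load = [0] * n_nodes
    for _ in range(n_blocks):
        for node in rng.sample(range(n_nodes), r):
            load[node] += 1
    return load


if __name__ == "__main__":
    n_blocks, r = 10_000, 3
    for n_nodes in (10, 100, 1000):
        load = place_blocks(n_blocks, n_nodes, r)
        print(
            f"nodes={n_nodes:5d}  total copies={sum(load)}  "
            f"mean per node={sum(load) / n_nodes:8.1f}  max per node={max(load)}"
        )
    # Under full replication every node would hold all n_blocks blocks,
    # no matter how many nodes join.
```

The total number of stored copies stays at R times the number of blocks, while the average load per node falls as nodes are added. That is the sense in which network capacity grows with network size.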

The SMC Blockchain network (TOIchain) supports regular, "staking" and "seed" nodes. A regular node is similar to a "light node" in Bitcoin that only receives transactions and forwards them to "staking" nodes. Unlike a Bitcoin "light node", a regular TOI node can optionally store validated blocks and be paid for the storage and accesses (this requires a unique wallet address). A "staking" node is a regular node uniquely associated with another wallet address by a "staking contract", such as "50 TOINs for 3 months starting now". The staking contracts are used by randomly selected "seed" nodes to build random transaction validation committees (Proof of Stake consensus protocol).

A "seed" node is a service supported by the TOI Foundation. Seed nodes serve as the DNS for TOIchain and have all the features of a "staking" node, with the "staking contract" and wallet address owned by the TOI Foundation. The TOIchain operates on EPOCHs as heartbeats for the network. Each EPOCH corresponds to a randomly selected "validation committee" of "staking" nodes. A randomly selected set of "seed" nodes decides the formation of each committee.

Each "validation committee" can have up to 128 validators. Each validator validates local transactions in parallel against the current best chain state. The committee assembles a block of partially validated transactions for committee-wide validation. A majority agreement decides which fully validated transactions enter the new block. The block is then considered "confirmed" but not "finalized".
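The committee step can be sketched as follows. Only the 128-validator limit and the majority rule come from the text above. How the randomness is derived (here an integer `epoch_seed`), the strict-majority threshold and the `approves` callback are assumptions made for illustration.

```python
import random

MAX_COMMITTEE = 128   # upper bound on committee size stated in the text


def select_committee(staking_nodes, epoch_seed, size=MAX_COMMITTEE):
    """Draw a committee of distinct staking nodes for one EPOCH."""
    rng = random.Random(epoch_seed)
    pool = sorted(staking_nodes)              # fixed order so the draw is reproducible
    return rng.sample(pool, min(size, len(pool)))


def committee_validate(candidate_txs, committee, approves):
    """Keep the transactions approved by a strict majority of the committee.

    approves(validator, tx) -> bool is a hypothetical validation callback.
    """
    accepted = []
    for tx in candidate_txs:
        votes = sum(1 for validator in committee if approves(validator, tx))
        if 2 * votes > len(committee):
            accepted.append(tx)
    return accepted


if __name__ == "__main__":
    nodes = [f"node{i}" for i in range(300)]
    committee = select_committee(nodes, epoch_seed=7)
    first_half = set(committee[: len(committee) // 2])

    def approves(validator, tx):
        if tx == "good":
            return True
        if tx == "split":
            return validator in first_half    # exactly half approve
        return False

    print(len(committee), committee_validate(["good", "split", "bad"], committee, approves))
```

A transaction approved by exactly half of the committee is not accepted under the strict-majority rule assumed here.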

The new block must be validated against the block of the previous EPOCH (EPOCH id - 1) before it is "finalized". This step is skipped for the genesis block. The committees must wait until the block of the previous EPOCH becomes the chain tip. Thus, multiple validation committees form a virtual pipeline at runtime. Finalized blocks match generated EPOCHs. Transactions that fail at any stage are removed from the block. There may be empty blocks when all transactions fail or the network is idle.
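The ordering rule described in this paragraph can be sketched as a small in-order finalizer. The class `EpochPipeline` and the `still_valid` callback are hypothetical. In particular, the rule used in the demonstration (drop a transaction already present in the previous block) is only a stand-in for whatever validation the protocol actually performs.

```python
class EpochPipeline:
    """Confirmed blocks may arrive in any order; they are finalized strictly by EPOCH."""

    def __init__(self, still_valid=None):
        self.chain = []       # finalized blocks; list index equals EPOCH id
        self.waiting = {}     # confirmed but not yet finalized, keyed by EPOCH id
        self.still_valid = still_valid or (lambda prev_txs, tx: True)

    def confirm(self, epoch_id, txs):
        self.waiting[epoch_id] = list(txs)
        self._finalize_ready()

    def _finalize_ready(self):
        # The next block can be finalized only when its predecessor is the chain tip.
        while len(self.chain) in self.waiting:
            txs = self.waiting.pop(len(self.chain))
            if self.chain:                    # validation is skipped for the genesis block
                prev_txs = self.chain[-1]
                txs = [tx for tx in txs if self.still_valid(prev_txs, tx)]
            self.chain.append(txs)            # may be an empty block


if __name__ == "__main__":
    pipe = EpochPipeline(still_valid=lambda prev_txs, tx: tx not in prev_txs)
    pipe.confirm(2, ["c"])
    pipe.confirm(1, ["a", "b"])
    print(pipe.chain)                         # nothing finalized yet: EPOCH 0 is missing
    pipe.confirm(0, ["a"])
    print(pipe.chain)
```

Committees for later EPOCHs can confirm their blocks early, but finalization drains in EPOCH order once the missing predecessor arrives. This is the pipeline behaviour the paragraph describes.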

Transactions may be replicated before validation to improve the chance of being included in a block, at the cost of paying more fees. All redundant copies will fail except for the first one.

When the network has no "staking" nodes, the "seed" nodes become "staking" nodes to keep the network operational. The TOI Foundation receives the transaction fees and validation rewards.

The TOI Foundation maintains the "seed" nodes and the overall network security and safety via automatic network monitoring and maintenance scripts.

SMC POS Consensus

The SMC POS consensus protocol randomly selects staking validators without staking pools to deter centralization attempts. The protocol also gives the network ledger a replication factor that can be tuned on demand without service interruptions. Therefore, as nodes are added, the SMC POS consensus protocol can deliver:

- Byzantine failure resistance

- Fault tolerance

- Program tamper resistance

- Centralization avoidance

- Incrementally better performance, security and reliability

More nodes in the network expand the network storage capacity without increasing replication overheads. More nodes facilitate a longer pipeline and higher parallelism for better performance, and better randomness for security. Higher R values improve service reliability. Lower R values deliver better performance. Since the number of nodes can be far greater than R, a monotonic performance increase can always be achieved. Most importantly, the native SMC protocol supports all these network parameter adjustments without protocol changes.

SMC blockchain protocol is also silent on transaction retransmission discipline. Since it is impossible to "double spend" under SMC protocol, transaction retransmission will enable different approval routes without double-spend risks (multiplexing). This ensures seamless integration with legacy blockchain wallets and infrastructures.

Summary

The SMC blockchain protocol is the only statistic multiplexed blockchain protocol to date. The SMC technology development history links blockchain protocol development to stateless HPC (high performance computing) developments. This is the only known technology that can offer decentralized processing for centralized controls, which makes it the perfect gateway for integrating web2.0 applications into web3.0 infrastructures.

References:

- [1] https://static.ias.edu/pitp/archive/2012files/Probabilistic_Logics.pdf

- [2] https://en.wikipedia.org/wiki/OSI_model

- [3] https://www.iso.org/standard/20269.html

- [4] https://groups.csail.mit.edu/tds/papers/Lynch/jacm93.pdf

- [5] https://en.wikipedia.org/wiki/Content-addressable_network

- [6] https://en.wikipedia.org/wiki/Distributed_hash_table

- [7] https://en.wikipedia.org/wiki/Chord_(peer-to-peer)

- [8] https://en.wikipedia.org/wiki/Pastry_(DHT)

- [9] https://en.wikipedia.org/wiki/Tapestry_(DHT)

- [10] https://en.wikipedia.org/wiki/Dataflow

- [11] https://en.wikipedia.org/wiki/Dataflow_architecture

- [12] https://www.researchgate.net/publication/319996786_Scalability_Dilemma_and_Statistic_Multiplexed_Computing_-_A_Theory_and_Experiment

- [13] https://en.wikipedia.org/wiki/Brachistochrone_curve

- [14] J. Shi, Statistic Multiplexed Computing System for Network-Scale Reliable High Performance Services, US PTO, #US 11,588,926 B2, granted February 21, 2023

- [15] https://www.researchgate.net/publication/319996786_Scalability_Dilemma_and_Statistic_Multiplexed_Computing_-_A_Theory_and_Experiment

- [16] https://www.researchgate.net/publication/285579358_AnkaCom_A_Development_and_Experiment_for_Extreme_Scale_Computing

- [17] https://buybitcoinworldwide.com/bitcoin-uptime/